Introduction

The capabilities of Augmented Reality (AR) technology, and its application to library services, are likely to improve in the next few years. While these are early days for AR in the library sector, at least some lines of development are already clear. Libraries are therefore in a position to establish the infrastructure, planning, staff skills and customer expectations that will remain more or less in place as specific apps and phones develop.

We look at two examples where AR appears set to be integrated into library services.

- Wayfinding: Helping patrons navigate the library and find specific collections and shelved items.

- Book selection: Helping patrons make their selection by presenting metadata and other connected information relevant to shelf items, along with recommendations of similar items.

Librarians currently provide both of these services to patrons, so we know there is demand for them. Moreover, automating them through an app can significantly lighten the workload on library staff and free them up for other tasks.

Wayfinding

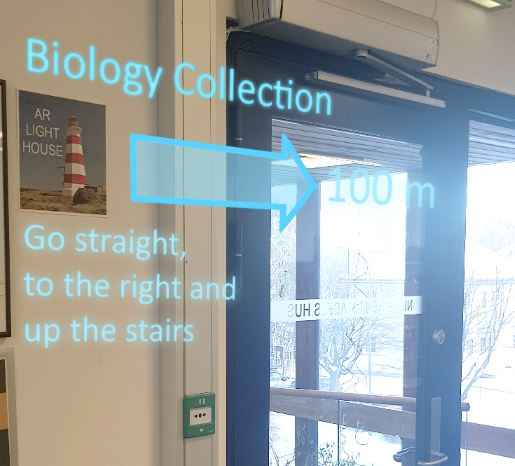

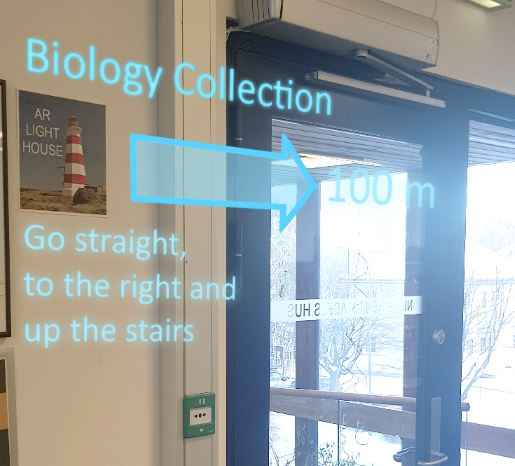

An augmented reality wayfinding app uses the camera of a library patron’s personal mobile device and superimposes directions on the camera image. The image below is an example of the type of display the user would see. It is taken from a joint project of Mandal Library and University of Oslo library, funded by the Norwegian National Library. A short video displaying their prototype is also available online. Their prototype is built using the Wikitude AR software.

Image: Prototype from ‘AR project @ Mandal Library and University of Oslo library’

One of the decisions for app design and infrastructure planning concerns how the app recognises a location within the library. One option, which does not seem a plausible path at present, is ‘scene recognition’. Here, photos of each main part of the building are collected and uploaded to the app, and serve as the dataset against which the app’s artificial intelligence (AI) matches the video input from the app user’s camera. Scene recognition by AI works relatively well for large outdoor scenes, where the unchanging features of the landscape predominate and changes make up a small portion of the visual field.

However, it is not reliable in an indoor setting, where the visual field may be dominated by changing features such as the presence or absence of people or the shifting of portable furniture. The image below illustrates this problem: the AR project team at the Mandal Library found scene recognition unreliable because of shifts in the position of the white trolley and the seated patron.

Image: ‘Scene recognition test’ from ‘AR project @ Mandal Library and University of Oslo library’

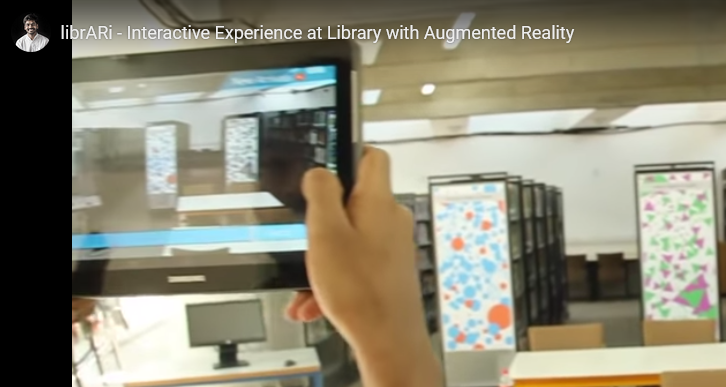

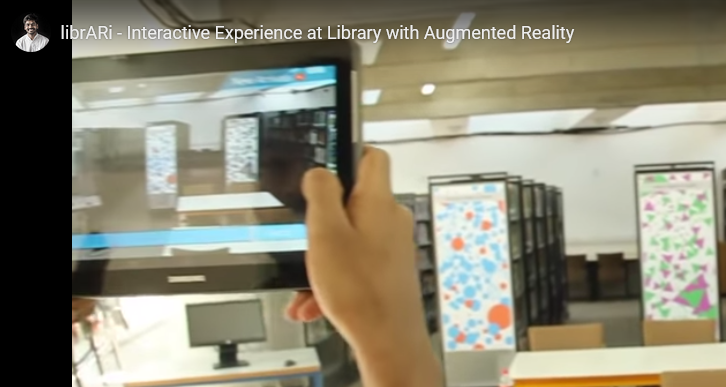

The other option, which seems more workable at present, is image recognition. This requires that printed copies of a high-contrast image are affixed around the library as landmarks. The posters have a common theme so that they are easily identified by patrons, but have slight variations at each location which allow the app to recognize the location. In the image below, from a project at the National Institute of Design in India, a prototype AR wayfinding app navigates library shelves by recognizing the images of the posters at the end of each aisle (with orange and blue circles, or purple and green triangles). A video displaying their wayfinder app is also available online.

Image: LibrARi app, www.pradeepsiddappa.com

Finally, Bluetooth Low Energy beacons, which are relatively inexpensive, can be placed around the library stacks to signal sub-categories, helping the app perform more fine-grained location tasks.
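As a rough illustration of how beacon signals might be turned into a location, the Python sketch below picks the beacon with the strongest average signal and looks up the stack section it marks. It is a minimal sketch under stated assumptions, not a description of any of the projects above: the beacon IDs, section names, the `nearest_section` function and the signal threshold are all hypothetical, and a real app would obtain the readings from the phone's Bluetooth scanning API.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping from beacon ID to the stack section it marks.
BEACON_SECTIONS = {
    "beacon-a": "Aisle 3, shelves 1-4",
    "beacon-b": "Aisle 3, shelves 5-8",
    "beacon-c": "Aisle 4, shelves 1-4",
}


def nearest_section(readings, sections=BEACON_SECTIONS, min_rssi=-90):
    """Return the section of the beacon with the strongest average signal.

    readings: iterable of (beacon_id, rssi_dbm) pairs. RSSI values are
    negative, and values closer to zero indicate a stronger signal.
    Unknown beacons and readings weaker than min_rssi (an assumed cutoff)
    are ignored. Returns None when no usable reading remains.
    """
    by_beacon = defaultdict(list)
    for beacon_id, rssi in readings:
        if beacon_id in sections and rssi >= min_rssi:
            by_beacon[beacon_id].append(rssi)
    if not by_beacon:
        return None
    # Averaging several readings smooths out momentary signal fluctuations.
    best = max(by_beacon, key=lambda b: mean(by_beacon[b]))
    return sections[best]
```

Averaging is only one simple way to steady noisy signal readings; the choice here is for brevity rather than accuracy.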

Book selection

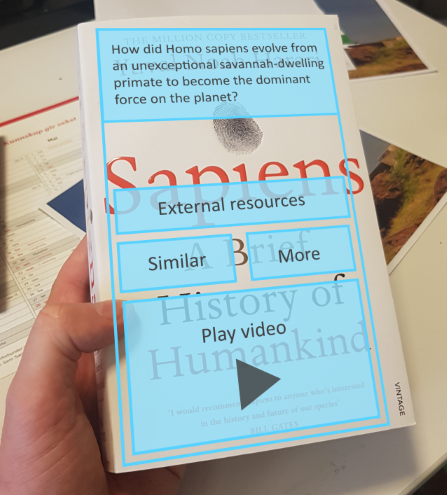

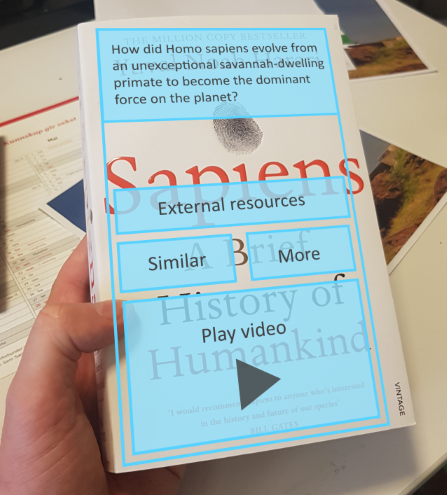

By recognizing a book, the app can superimpose metadata or other connected information. Here is an image of the type of service developers are aiming for. When a book is viewed through the mobile device’s camera, the app superimposes a blurb on the book (top), a link to external resources (such as a Wikipedia page, if available), a link to similar items in the library (based on subject and author metadata retrieved from the OPAC), and a link to play a video (if an author interview, talk, or presentation is associated with the book). Because manually associating this information with each shelved item would be time consuming, the aim is to automate the harvesting of such information through APIs that consult specific sources like the library’s OPAC and Wikipedia.

Image: prototype for ‘AR project @ Mandal Library and University of Oslo library’
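To make the 'similar items' idea more concrete, here is a minimal Python sketch that ranks catalogue records by shared subject headings, with a bonus for a shared author. It assumes the records have already been retrieved from the OPAC. The record layout, the `similarity` and `similar_items` functions and the 0.5 author bonus are illustrative assumptions rather than part of any prototype described here.

```python
def similarity(target, candidate):
    """Score a candidate record: subject overlap plus an author bonus."""
    t_subjects = set(target["subjects"])
    c_subjects = set(candidate["subjects"])
    union = t_subjects | c_subjects
    # Jaccard overlap: shared subjects divided by all subjects on either record.
    overlap = len(t_subjects & c_subjects) / len(union) if union else 0.0
    # Assumed weighting: a shared author adds a fixed bonus of 0.5.
    author_bonus = 0.5 if target["author"] == candidate["author"] else 0.0
    return overlap + author_bonus


def similar_items(target, catalogue, limit=3):
    """Return IDs of the highest-scoring records, excluding the target itself."""
    scored = [
        (similarity(target, record), record)
        for record in catalogue
        if record["id"] != target["id"]
    ]
    # Drop records that share neither a subject nor the author.
    scored = [(score, record) for score, record in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [record["id"] for _, record in scored[:limit]]


# Hypothetical records standing in for OPAC results.
CATALOGUE = [
    {"id": "b1", "author": "Author A", "subjects": ["augmented reality", "libraries"]},
    {"id": "b2", "author": "Author A", "subjects": ["cataloguing"]},
    {"id": "b3", "author": "Author B", "subjects": ["augmented reality", "libraries", "mobile apps"]},
    {"id": "b4", "author": "Author C", "subjects": ["gardening"]},
]
```

With this sample data, `similar_items(CATALOGUE[0], CATALOGUE)` ranks `b3` (shared subjects) ahead of `b2` (shared author only) and leaves out `b4`.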

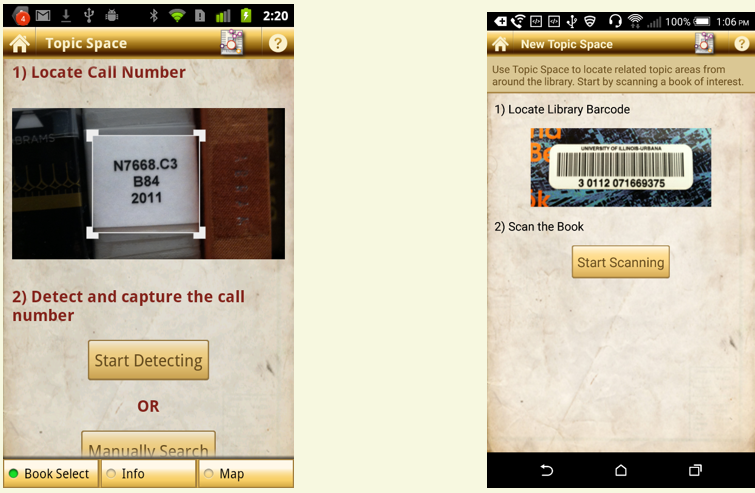

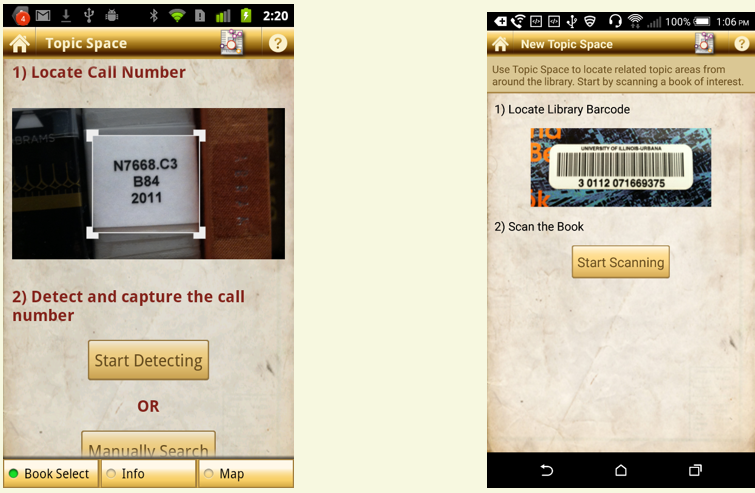

Different methods have been trialled for identifying books. The above image is based on recognizing the book cover from the app’s catalogue of book covers. However, this approach is still being improved, as apps have been unreliable at identifying books under different lighting conditions. Other approaches are to identify the book by its call number using optical character recognition (below left), or by its bar code (below right). These latter options seem to work better at present, as the AI performs better at recognizing high-contrast black and white images.

Image source: Jim Hahn, Ben Ryckman, and Maria Lux, 2015.
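Even with high-contrast labels, optical character recognition can confuse similar-looking characters, so the recognized text usually needs tidying before it can be matched against the catalogue. The Python sketch below shows one possible clean-up step. It assumes a simple label format (a three-digit class number with optional decimals, followed by a short author mark), and the `normalize_call_number` function and the substitution table are hypothetical. Real call number formats vary between libraries.

```python
import re

# Assumed label format: three-digit class, optional decimals, then a short
# author mark, for example "025.04 HAH". Real labels vary between libraries.
CALL_NUMBER = re.compile(r"^(\d{3}(?:\.\d+)?)\s+([A-Z]{1,4})$")

# Letters that OCR commonly confuses with digits (an illustrative list).
DIGIT_FIXES = str.maketrans({"O": "0", "I": "1", "L": "1", "S": "5", "B": "8"})


def normalize_call_number(ocr_text):
    """Return a cleaned call number, or None if the text does not fit the pattern."""
    parts = ocr_text.upper().split()
    if len(parts) != 2:
        return None
    number, mark = parts
    # Apply digit substitutions only to the numeric part of the label.
    number = number.translate(DIGIT_FIXES)
    candidate = f"{number} {mark}"
    return candidate if CALL_NUMBER.match(candidate) else None
```

For example, `normalize_call_number("O25.04 hah")` yields `"025.04 HAH"`, while text that does not fit the assumed pattern yields `None`, so the app can ask the patron to rescan.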